What stats are appropriate for comparing paired data?

We can start by thinking about two cases:

1) where both sets of numbers (say 30 each) are drawn from the same normal distribution

2) where the second set of numbers (say 30) is drawn from a different normal distribution

Logically, the two cases should give you different statistics. In case 2, the sets should be significantly different.
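The two cases can be simulated in a few lines of base R. This is only a sketch: the seed, means, and standard deviations are arbitrary choices of mine for illustration and do not come from the figures on this page.

```r
set.seed(42)  # arbitrary seed so the sketch is reproducible
n <- 30

# Case 1: both sets come from the same normal distribution
a_same <- rnorm(n, mean = 10, sd = 2)
b_same <- rnorm(n, mean = 10, sd = 2)

# Case 2: the second set comes from a distribution with a shifted mean
a_diff <- rnorm(n, mean = 10, sd = 2)
b_diff <- rnorm(n, mean = 12, sd = 2)

# Paired t-test on each case
res_same <- t.test(b_same, a_same, paired = TRUE)
res_diff <- t.test(b_diff, a_diff, paired = TRUE)

print(res_same$p.value)
print(res_diff$p.value)
```

Rerunning with different seeds shows how much the p-values move around from sample to sample, which is part of why a single p-value is not the whole story.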

But is that the only thing we need to look out for?

Clearly not. We can also look at the effect size, the power of the test, what Bayesian tests say about the data, etc.

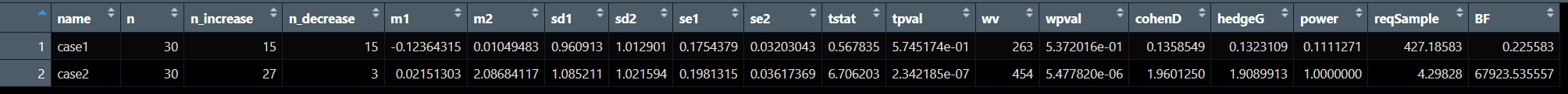

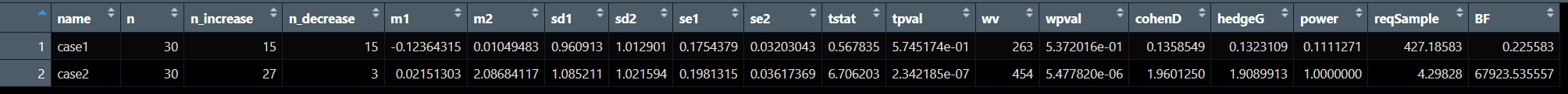

Consider the output from this custom code:

Note that the standard error calculated here is not the within-subjects one. To calculate that, see the page on data visualizations.

Potentially important information might be:

1) How many values in set B are increased/decreased relative to their pairs in set A. This tells you whether the effect is driven by just a few cases or by the majority of cases.

2) What the actual group means, standard deviations and standard errors are. See the note in the above figure caption though.

3) What the t-statistic and p-values are, assuming a standard paired t-test.

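4) How the results would differ with a non-parametric test, that is, one that does not assume normally distributed data* (for example, the Wilcoxon signed-rank test; Welch's test, by contrast, relaxes the equal-variance assumption, not normality). One could also run normality tests such as the Kolmogorov-Smirnov (KS) test or the Anderson-Darling test.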

5) What is the effect size? Cohen's d is a pretty standard measure, but one might also want to use Hedges' g in some instances (e.g., when sample sizes are below 20).

6) What is the power of the test? That is, if the true effect size equals the one estimated from this sample, in what percentage of repeated studies with the same sample size would the test be expected to give a significant result?

7) Given the estimated effect size, what sample size is required to reach a power of at least 80% (a common standard in psychology research)?

8) What would Bayesian statistics say about the data? Is there moderate evidence for the alternative hypothesis (BF > 3)? Is there moderate evidence for the null hypothesis (BF < 1/3)? The latter is particularly useful when a t-test gives a non-significant result, to know whether it is truly a null phenomenon or whether more power is required to say anything substantial.
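As a rough illustration of items 1 to 7, here is a minimal sketch using only base R. It is not the custom code that produced the output above. The data are simulated, the variable names are hypothetical, the Shapiro-Wilk test stands in for the normality tests mentioned, and the effect size is computed as the mean difference divided by the standard deviation of the differences (one of several conventions for paired data). Item 8 is left out because it needs an add-on package such as 'BayesFactor'.

```r
set.seed(1)
n <- 30
a <- rnorm(n, mean = 10, sd = 2)         # hypothetical set A
b <- a + rnorm(n, mean = 1, sd = 1.5)    # hypothetical set B, paired with A
d <- b - a                               # paired differences

# 1) Direction of change per pair
n_up   <- sum(d > 0)
n_down <- sum(d < 0)

# 2) Group descriptives (ordinary standard error, not the within-subjects one)
describe <- function(x) c(mean = mean(x), sd = sd(x), se = sd(x) / sqrt(length(x)))
desc <- rbind(A = describe(a), B = describe(b))

# 3) Standard paired t-test
tt <- t.test(b, a, paired = TRUE)

# 4) Non-parametric alternative, plus a normality check on the differences
wt <- wilcox.test(b, a, paired = TRUE)
sw <- shapiro.test(d)

# 5) Effect size: mean difference / SD of differences,
#    with an approximate small-sample correction for Hedges' g
cohen_dz <- mean(d) / sd(d)
hedges_g <- cohen_dz * (1 - 3 / (4 * (n - 1) - 1))

# 6) Power of a paired t-test at the observed effect size and sample size
pw <- power.t.test(n = n, delta = abs(cohen_dz), sd = 1,
                   sig.level = 0.05, type = "paired")

# 7) Number of pairs needed for 80% power at that effect size
need <- power.t.test(delta = abs(cohen_dz), sd = 1,
                     sig.level = 0.05, power = 0.80, type = "paired")
n_required <- ceiling(need$n)

print(c(increased = n_up, decreased = n_down))
print(desc)
print(c(t = unname(tt$statistic), p_t = tt$p.value, p_wilcoxon = wt$p.value))
print(c(cohen_dz = cohen_dz, hedges_g = hedges_g,
        power = pw$power, n_required = n_required))
```

Passing the standardized effect as `delta` with `sd = 1` keeps the power calculation in effect-size units, which makes it easy to swap in a different effect size estimate.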

The above code makes use of a few R packages (e.g. 'pwr', 'BayesFactor', 'effsize') to calculate stats for all of these, but as always, the appropriate tests depend on the nature of the data. This is only meant as a starting point for thinking more deeply about paired data.
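For orientation, this is roughly how those three packages might be called on paired data. It is a sketch under my own assumptions (simulated data, default priors and settings), not a reproduction of the custom code. Each call is wrapped in `requireNamespace()` so the snippet still runs if a package is not installed.

```r
# Hypothetical paired data, for illustration only
set.seed(2)
a <- rnorm(30, mean = 10, sd = 2)
b <- a + rnorm(30, mean = 1, sd = 1.5)

if (requireNamespace("effsize", quietly = TRUE)) {
  # Cohen's d for paired samples; hedges.correction = TRUE requests Hedges' g
  print(effsize::cohen.d(b, a, paired = TRUE))
  print(effsize::cohen.d(b, a, paired = TRUE, hedges.correction = TRUE))
}

if (requireNamespace("pwr", quietly = TRUE)) {
  dz <- mean(b - a) / sd(b - a)  # standardized mean difference of the pairs
  # Achieved power with 30 pairs, then pairs needed for 80% power
  print(pwr::pwr.t.test(n = 30, d = dz, sig.level = 0.05, type = "paired"))
  print(pwr::pwr.t.test(d = dz, sig.level = 0.05, power = 0.80, type = "paired"))
}

if (requireNamespace("BayesFactor", quietly = TRUE)) {
  bf <- BayesFactor::ttestBF(x = b, y = a, paired = TRUE)
  # Bayes factor for the alternative over the null; its reciprocal favors the null
  bf10 <- BayesFactor::extractBF(bf)$bf
  print(bf10)
}
```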

This code is part of a larger set of custom R scripts I am compiling so that I can import my code using the source() technique.
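A minimal sketch of that pattern: a helper function lives in its own .R file and is loaded with `source()`. The file here is a temporary one and the function name `count_direction` is hypothetical, made up for this example.

```r
# Write a tiny helper to a temporary file; in practice this would be a saved .R script
helper_path <- tempfile(fileext = ".R")
writeLines(c(
  "count_direction <- function(a, b) {",
  "  d <- b - a",
  "  c(increased = sum(d > 0), decreased = sum(d < 0), unchanged = sum(d == 0))",
  "}"
), helper_path)

# source() evaluates the file, making its functions available in the current session
source(helper_path)

res <- count_direction(a = c(1, 2, 3), b = c(2, 1, 5))
print(res)
```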

*For paired tests, what matters is whether or not the DIFFERENCES between pairs are normally distributed. Each of the datasets themselves need not be normally distributed for a standard paired t-test to be appropriate.
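A small simulated example of this point, assuming a skewed (exponential) baseline and a normally distributed change per pair. The distributions and parameters are arbitrary choices for illustration, and the Shapiro-Wilk test is used simply because it ships with base R.

```r
set.seed(3)
n <- 200
a <- rexp(n, rate = 0.2)               # strongly skewed baseline values
b <- a + rnorm(n, mean = 1, sd = 1)    # each pair differs by a normal shift
d <- b - a                             # paired differences

p_a <- shapiro.test(a)$p.value   # set A on its own is clearly non-normal
p_d <- shapiro.test(d)$p.value   # the differences are what the paired t-test relies on

print(c(p_set_A = p_a, p_differences = p_d))
print(t.test(b, a, paired = TRUE))
```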