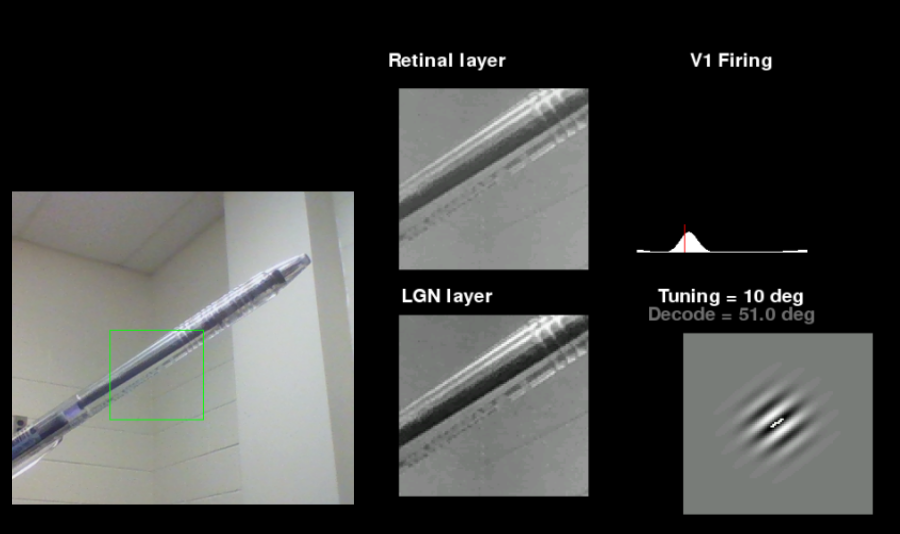

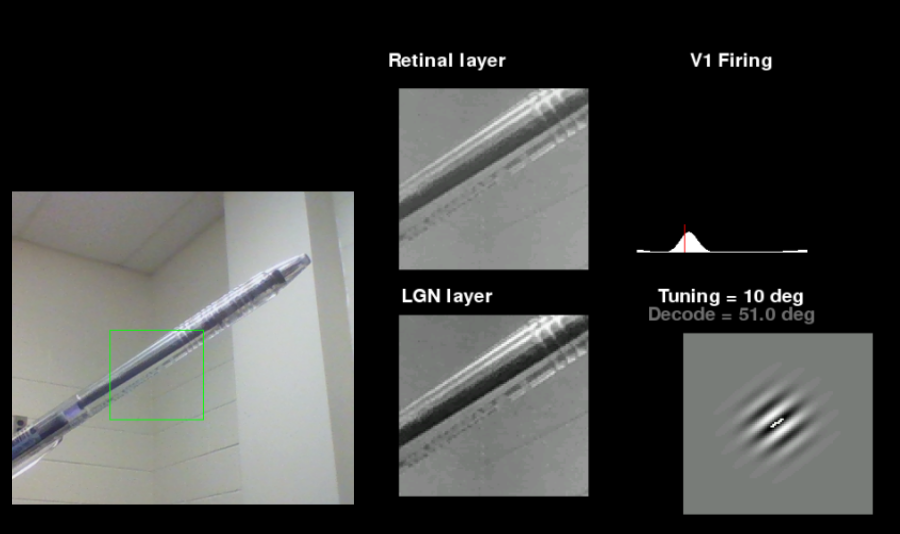

Part of my research revolves around the idea that V1 neurons can be tuned by stimulus statistics. Given that, I thought it would be interesting to develop a kind of computer vision model that would dynamically tune itself. This was part of a tech demo I cobbled together in an afternoon to show that the computer vision part was not hard to do.

The idea is simple. the "Retinal" layer is simply what a webcam sees. The "LGN" layer maximises the contrast based on the part of the 'retina' that is selected. Information from the LGN layer is fed to the V1 layer, which has 'neurons' that code for each possible integer value of orientation. This was done by Gabor filtering the LGN image, the matrix sum of which corresponds to the 'activity' of a given V1 neuron. Then it was a simple matter of decoding the population firing across the neurons.

Granted, the system is not tuning itself yet. I have been developing a model for that without the use of a camera for practical purposes (chiefly, so I can simulate runs without having to show stimulus to a camera in real-time). But the model I made for my thesis seems to work well, and manages to make predictions about human performance that we were able to validate (manuscript in progress). Perhaps then it is time to apply that model to this camera application?

Here is the Python code used for the camera.