The scikit-learn website has an example showing how AdaBoost improves the fit of a decision tree regressor on a sinusoidal function. The example uses 300 estimators, but is that enough? How many are enough?
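For reference, the setup in that example looks roughly like the sketch below. A single depth-4 tree and AdaBoost over the same kind of tree with 300 estimators are both fit to a noisy sum of two sines. The data-generating details (seed, noise level, sample count) are assumptions based on the official example and are not taken from this post. The `sse` helper is a hypothetical name for the sum-of-squared-errors loss used here.

```python
import numpy as np
from sklearn.ensemble import AdaBoostRegressor
from sklearn.tree import DecisionTreeRegressor

# Noisy sinusoidal data (assumed to mirror the scikit-learn example)
rng = np.random.RandomState(1)
X = np.linspace(0, 6, 100)[:, np.newaxis]
y = np.sin(X).ravel() + np.sin(6 * X).ravel() + rng.normal(0, 0.1, X.shape[0])

# Base model: a single depth-4 regression tree
tree = DecisionTreeRegressor(max_depth=4).fit(X, y)

# Boosted model: AdaBoost over depth-4 trees, 300 boosting rounds
boosted = AdaBoostRegressor(
    DecisionTreeRegressor(max_depth=4), n_estimators=300, random_state=rng
).fit(X, y)


def sse(y_true, y_pred):
    """Sum of squared errors (hypothetical helper name)."""
    return float(np.sum((y_true - y_pred) ** 2))


print("single tree SSE:", sse(y, tree.predict(X)))
print("boosted SSE:    ", sse(y, boosted.predict(X)))
```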

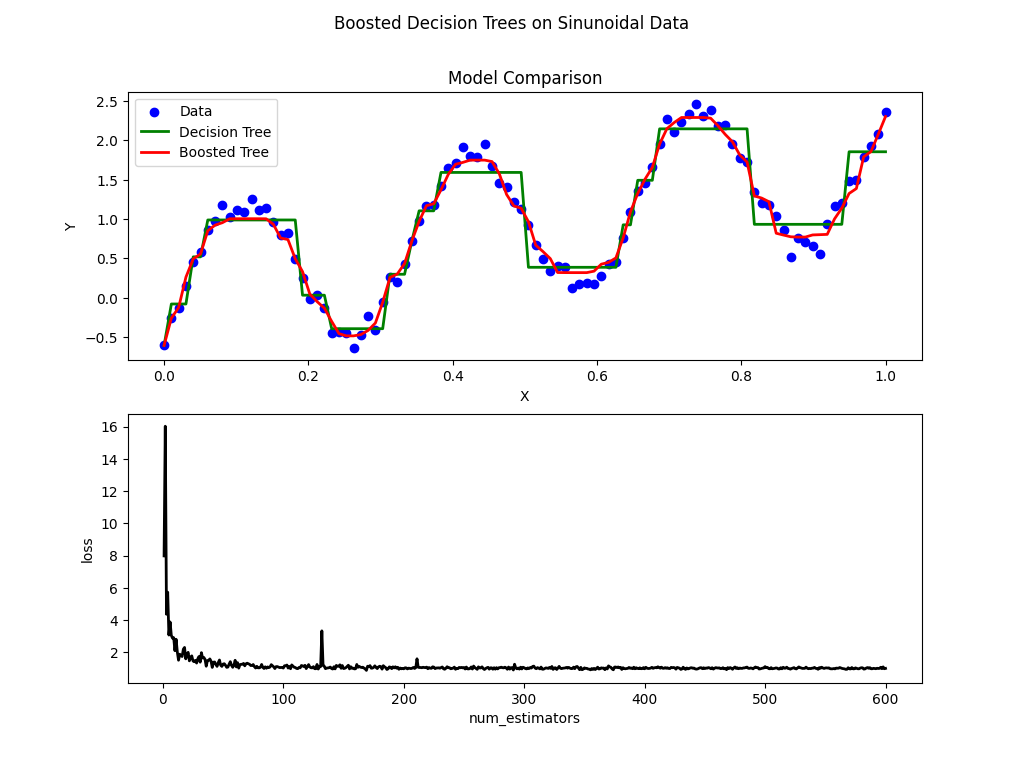

What we really want to know is how the fit changes as the number of boosts increases. To see that, let's look at the loss as a function of the number of boosts (the green line is the base decision tree model; the red line is the boosted model with 600 estimators/boosts):

In this case, the suggested 300 boosts looks sufficient: the loss (sum of squared errors) flattens out before that point.
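A loss curve like this can be computed in a single fit, because `AdaBoostRegressor.staged_predict` yields the ensemble prediction after each boosting round. The sketch below assumes the same noisy-sinusoid data as the official example (seed, noise level, and sample count are assumptions), uses the training-set sum of squared errors as the loss, and is not the code used for this post. The names `base_sse` and `staged_sse` are illustrative.

```python
import numpy as np
from sklearn.ensemble import AdaBoostRegressor
from sklearn.tree import DecisionTreeRegressor

# Noisy sinusoidal data (parameters are assumptions)
rng = np.random.RandomState(1)
X = np.linspace(0, 6, 100)[:, np.newaxis]
y = np.sin(X).ravel() + np.sin(6 * X).ravel() + rng.normal(0, 0.1, X.shape[0])

# Baseline: a single depth-4 tree (the "green line")
tree = DecisionTreeRegressor(max_depth=4).fit(X, y)
base_sse = float(np.sum((y - tree.predict(X)) ** 2))

# Boosted model with up to 600 rounds (the "red line")
boosted = AdaBoostRegressor(
    DecisionTreeRegressor(max_depth=4), n_estimators=600, random_state=rng
).fit(X, y)

# staged_predict yields the weighted-median ensemble prediction after
# 1, 2, ..., k boosts, so one pass gives the whole loss curve.
staged_sse = [float(np.sum((y - pred) ** 2)) for pred in boosted.staged_predict(X)]

print(f"base tree SSE: {base_sse:.3f}")
# Boosting can stop early, so only report checkpoints that exist
checkpoints = [n for n in (1, 50, 100, 300, 600) if n <= len(staged_sse)]
for n in checkpoints:
    print(f"{n:4d} boosts: SSE = {staged_sse[n - 1]:.3f}")
```

To get a figure like the one described, plot `staged_sse` against `range(1, len(staged_sse) + 1)` and draw `base_sse` as a horizontal line. scikit-learn stops boosting early if a round's weighted error becomes too large, so fewer than 600 stages may be returned; the checkpoint filter guards against that.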

Boosting clearly improves the decision tree's fit, but the boosted model still does not reproduce the sinusoidal pattern perfectly.

The Python/scikit-learn code used here was modified from the official example.